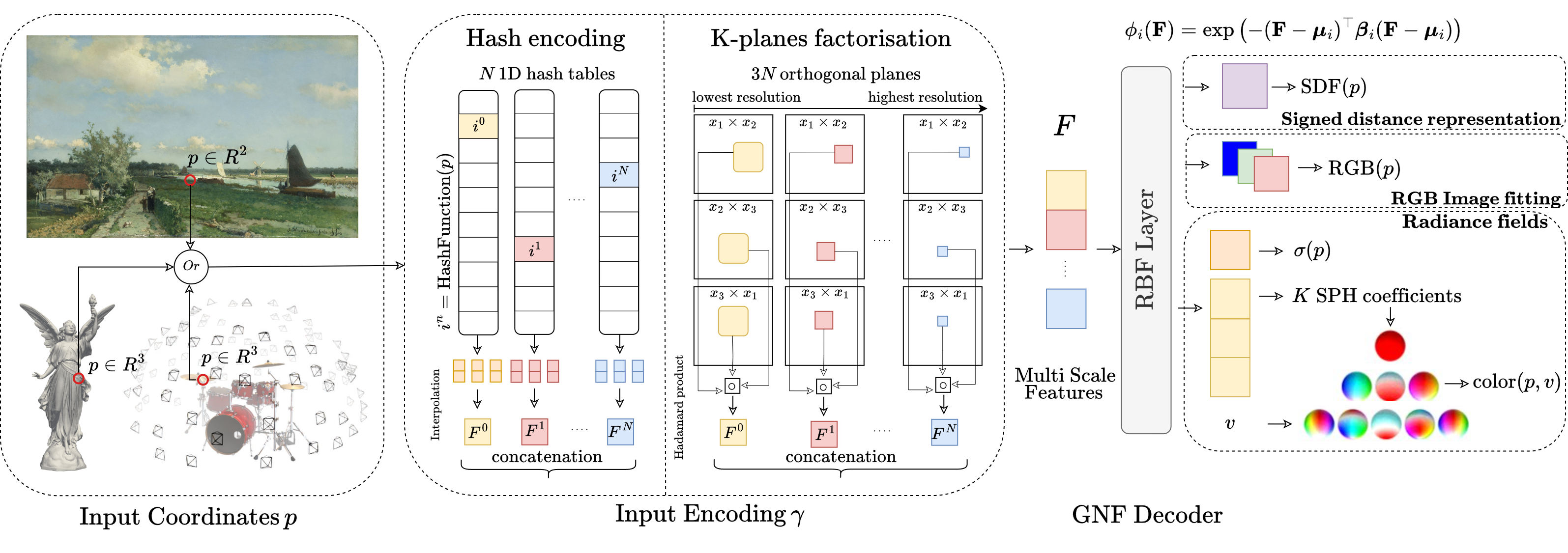

We introduce GNF, a novel framework that leverages Gaussian Radial Basis Functions (RBFs) to represent neural fields efficiently. We demonstrate its effectiveness in representing 3D geometry, RGB images, and radiance fields.

In this paper, we introduce Gaussian Neural Fields (GNF), a novel lightweight decoder based on Radial Basis Functions (RBFs). Instead of using traditional MLPs—which approximate nonlinear functions via piecewise linear activations (e.g. ReLU)—our method leverages smooth Gaussian kernels defined directly in the learned feature space. Each radial unit computes its activation from the Euclidean distance between the input feature and a learned center, with per-dimension scaling enabling anisotropic and localized modeling in feature space. By aligning kernel dimensionality with the learned input features, GNF effectively represents highly nonlinear signals with a single decoding layer consisting of fewer than 64 units—significantly more compact than shallow three-layer MLPs used in state-of-the-art methods. This minimal architectural depth shortens gradient paths, accelerating training and inference. Additionally, our fully vectorized implementation reduces computational complexity from O(BN²) to O(BN), where B is the batch size and N is the number of decoding units. As a result, GNF achieves comparable reconstruction quality using only about 78% of the floating-point operations (FLOPs) required by a three-layer MLP.
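As a rough illustration of the radial unit described above, the following NumPy sketch decodes a batch of features with a single layer of anisotropic Gaussian units. It is not the authors' implementation: the function name `gaussian_rbf_layer`, the log-scale parameterization, the linear read-out `weights`, and all shapes are assumptions made for the example, and the naive broadcast here does not reproduce the vectorization or complexity claims of the paper.

```python
import numpy as np

def gaussian_rbf_layer(features, centers, log_scales, weights):
    """Decode features with one layer of anisotropic Gaussian units.

    features:   (B, D) learned input features
    centers:    (N, D) one learned center per radial unit
    log_scales: (N, D) per-dimension log scaling (hypothetical parameterization)
    weights:    (N, C) linear read-out to C output channels (assumed)
    """
    # Per-dimension scaled offsets between every feature and every center: (B, N, D)
    diff = (features[:, None, :] - centers[None, :, :]) * np.exp(log_scales)[None, :, :]
    # Squared scaled Euclidean distance for each (sample, unit) pair: (B, N)
    sq_dist = np.sum(diff ** 2, axis=-1)
    # Gaussian activation in (0, 1], equal to 1 exactly at the unit's center
    phi = np.exp(-sq_dist)
    # Linear combination of unit activations: (B, C)
    return phi @ weights

# Toy usage with random parameters (sizes chosen only for illustration).
rng = np.random.default_rng(0)
B, D, N, C = 4, 8, 16, 1
features = rng.normal(size=(B, D))
centers = rng.normal(size=(N, D))
log_scales = np.zeros((N, D))      # unit scaling in every dimension
weights = rng.normal(size=(N, C))
out = gaussian_rbf_layer(features, centers, log_scales, weights)
print(out.shape)
```

Because each unit has its own scale per feature dimension, a unit can respond narrowly along some dimensions and broadly along others, which is the anisotropic, localized behavior described in the text.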

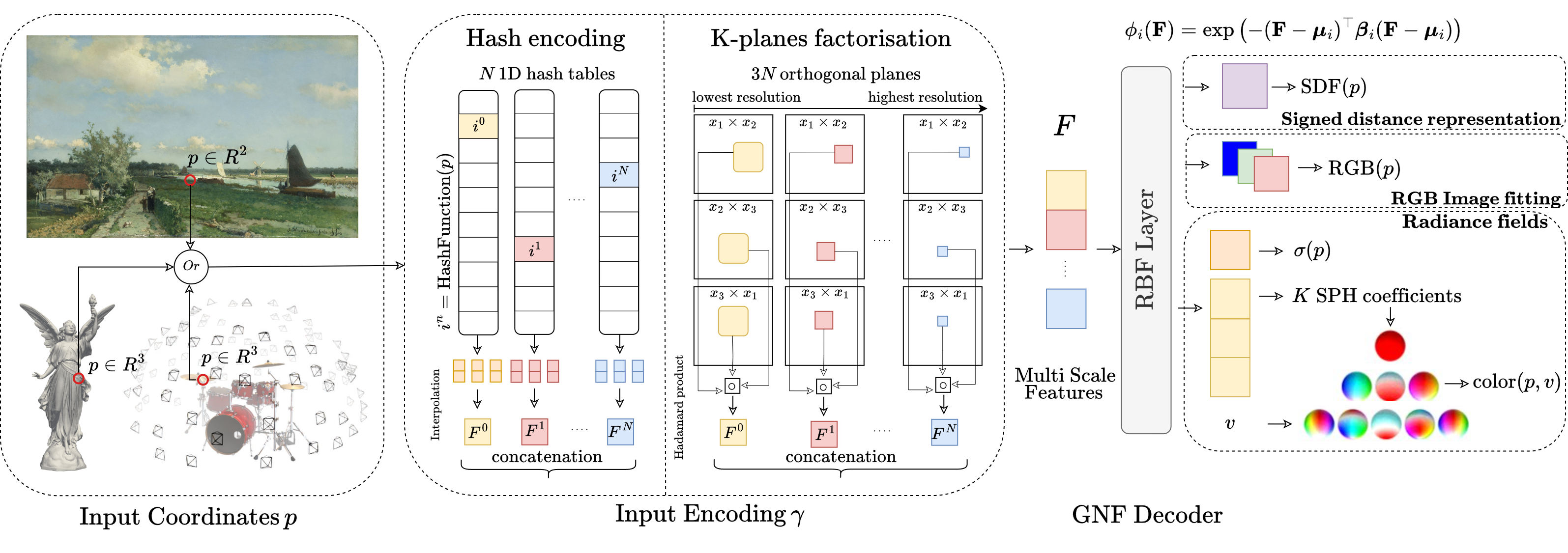

Our model (third figure from the left) stands out with its single decoding layer compared to other neural field models.

The meshes below show the zero-level sets of the Signed Distance Function (SDF) estimated by our model and the DiF-Grid model from FactorFields [1]. Our model uses approximately 3.5 million parameters and converges in 15 seconds, compared to 5 million parameters and 20 seconds for DiF-Grid [1]. While both models produce comparable results, ours captures finer scene details with fewer parameters and faster convergence. For each shape, corresponding views are aligned to allow a direct comparison.

The meshes below show the zero-level sets of the Signed Distance Function (SDF) estimated by our model and the NeuRBF model [2]. Our model converges in 15 seconds, compared to 60 seconds for NeuRBF [2], while both models produce comparable results. For each shape, corresponding views are aligned to allow a direct comparison.

The video below presents the extracted mesh during our model's training, with the training timer displayed in the bottom-left corner.

The overall shape is captured within a few seconds.

We use our model with a single RBF layer to fit high-resolution images. See examples below.

Zoom-in visualization of reconstructed images generated by our model.

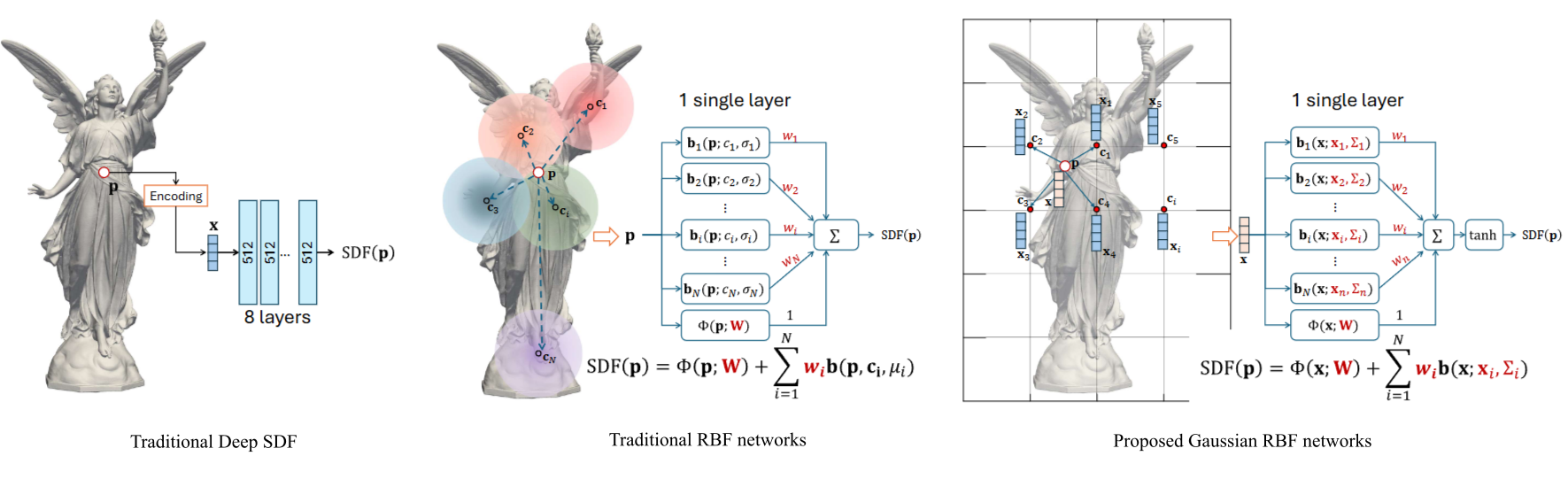

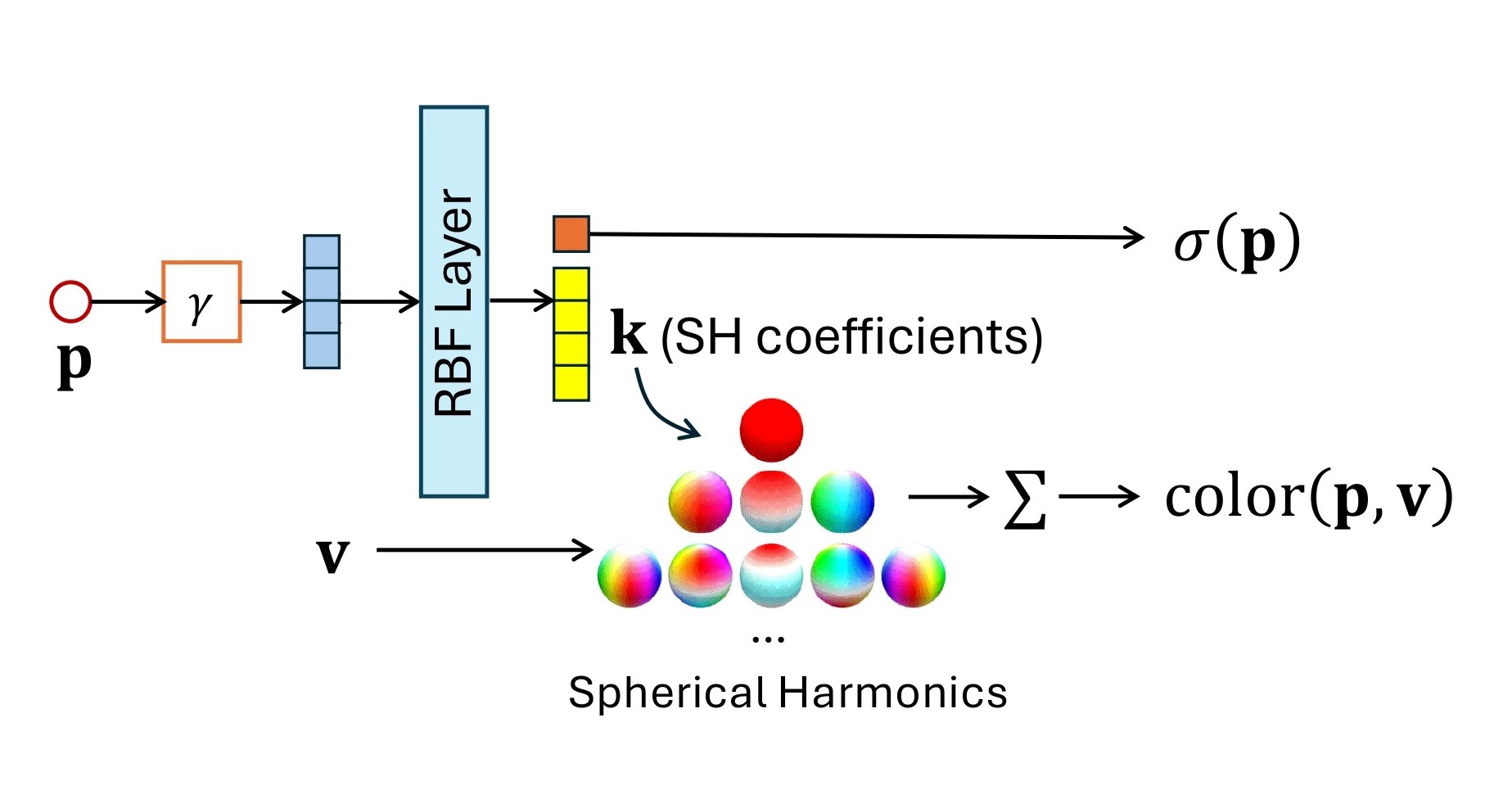

We extend our framework to support a larger number of outputs, enabling the estimation of spherical harmonic coefficients for novel view synthesis. The proposed GNF-SH model architecture is illustrated in the figure below.
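To make the role of spherical harmonics concrete, here is a minimal sketch of how predicted coefficients can be turned into a view-dependent color. It assumes degree-1 real spherical harmonics with one common sign convention and a layout of four coefficients per color channel; the names `sh_basis_deg1` and `sh_to_rgb` are hypothetical, and the degree and post-processing used by GNF-SH may differ.

```python
import numpy as np

SH_C0 = 0.28209479177387814  # 1 / (2 * sqrt(pi)), the constant degree-0 basis
SH_C1 = 0.4886025119029199   # sqrt(3 / (4 * pi)), scale of the degree-1 basis

def sh_basis_deg1(dirs):
    """Real SH basis up to degree 1 for unit view directions (B, 3) -> (B, 4)."""
    x, y, z = dirs[:, 0], dirs[:, 1], dirs[:, 2]
    return np.stack(
        [np.full_like(x, SH_C0), -SH_C1 * y, SH_C1 * z, -SH_C1 * x], axis=-1
    )

def sh_to_rgb(sh_coeffs, dirs):
    """Combine coefficients (B, 3, 4) with the basis to get colors (B, 3)."""
    basis = sh_basis_deg1(dirs)
    # Sum over the 4 basis functions separately for each color channel
    return np.einsum("bck,bk->bc", sh_coeffs, basis)

# Toy usage: two view directions, coefficients with only the constant term set.
dirs = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0]])
sh_coeffs = np.zeros((2, 3, 4))
sh_coeffs[:, :, 0] = 1.0 / SH_C0   # constant term chosen so each channel equals 1
rgb = sh_to_rgb(sh_coeffs, dirs)
print(rgb)
```

With only the constant term set, the color is the same from every direction; nonzero degree-1 coefficients add a smooth dependence on the viewing direction.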

Network architecture of the radiance fields model.